GrowAI

Abstract

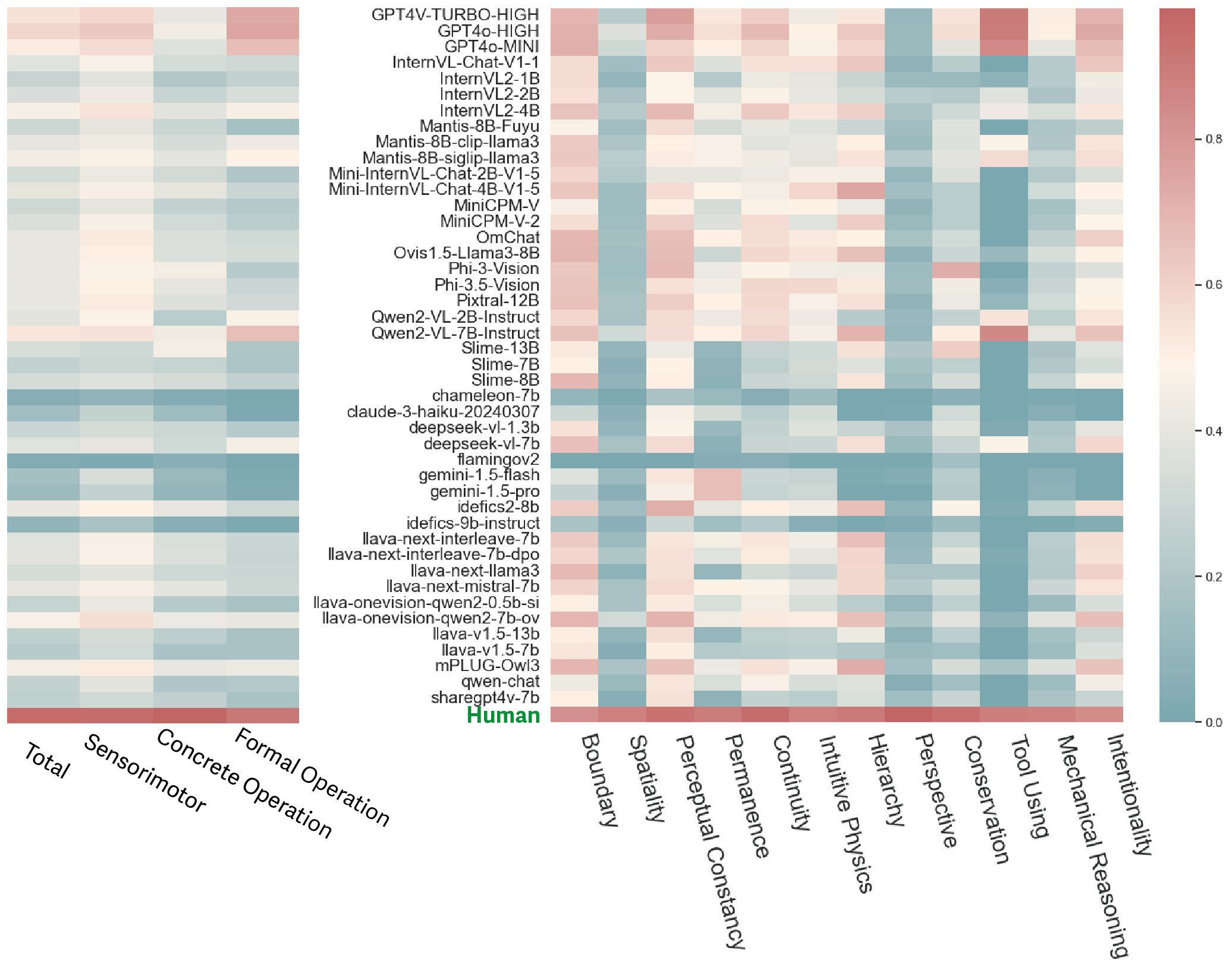

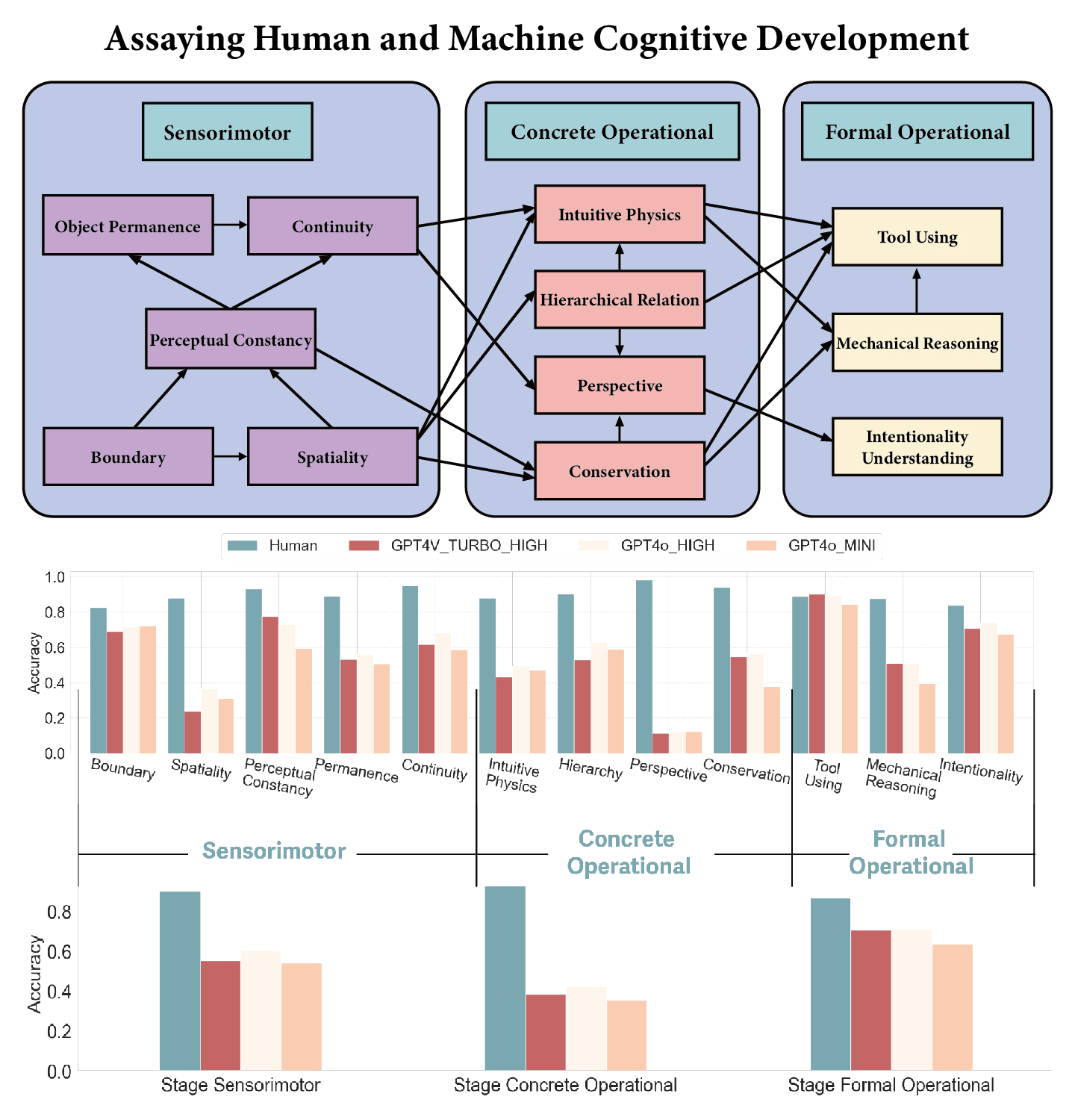

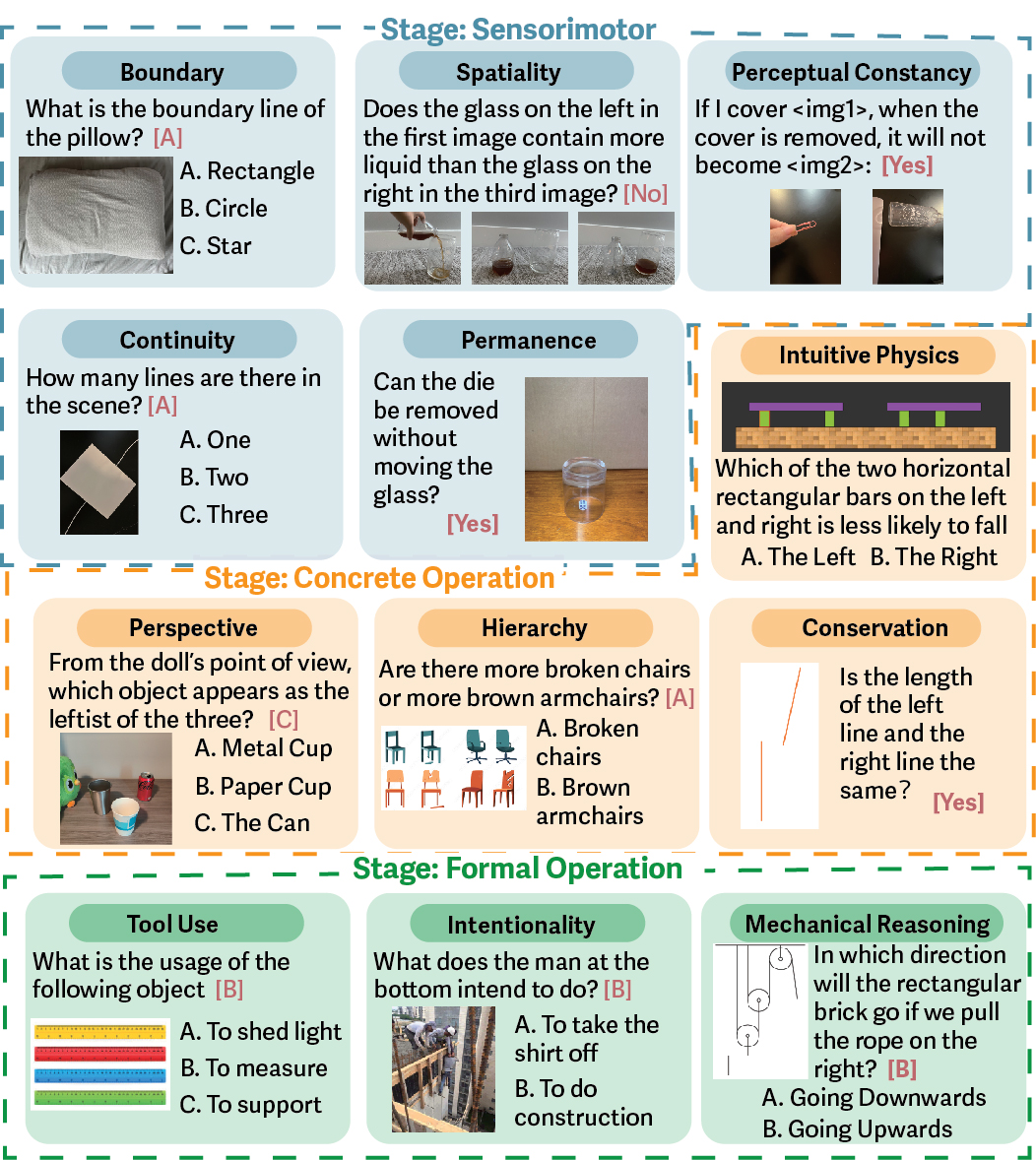

Are Multi-modal Large Language Models (MLLMs) stochastic parrots? Do they genuinely understand the tasks they excel at, and are they truly capable of performing them? This paper explores the fundamental basis of MLLMs, i.e., the core cognitive abilities that human intelligence builds upon to perceive, comprehend, and reason. To this end, we propose CogDevelop2K, a comprehensive benchmark that spans 12 sub-concepts, from fundamental knowledge such as object permanence and boundary to advanced reasoning such as intentionality understanding, structured along the developmental trajectory of the human mind. We evaluate 46 MLLMs on our benchmark. We further evaluate the influence of evaluation strategies and prompting techniques. Surprisingly, we observe a reversed cognitive developmental trajectory compared to humans.

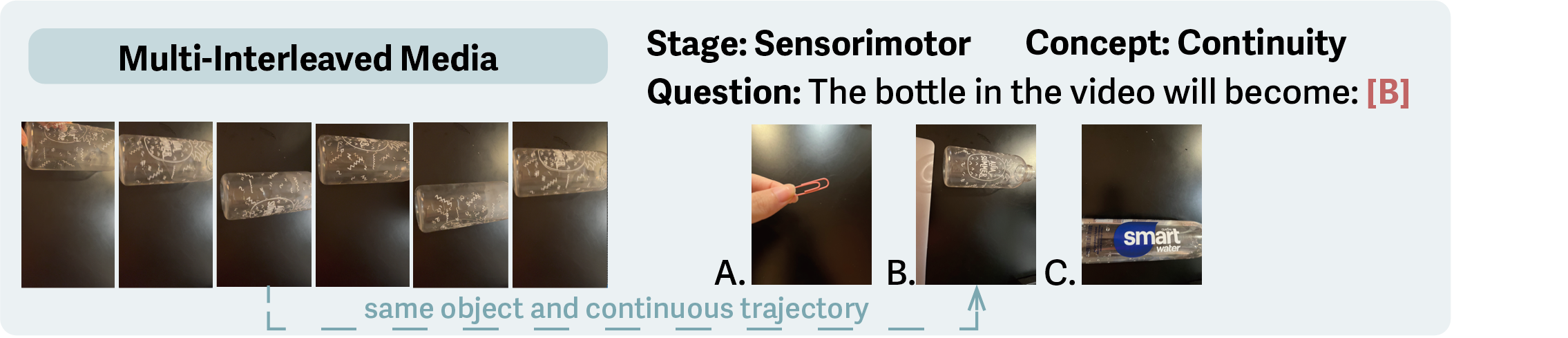

Cognitive Experiment Example

A video-image interleaved example of a multi-frame question. To infer the correct answer, the model needs to understand the question by mapping each image to its option letter (co-reference), to understand the correlation between frames (temporal understanding), and to infer the possible trajectory of the bottle (reasoning).
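The interleaved layout described above can be illustrated with a minimal sketch. It is not the benchmark's actual data format or evaluation code: `Segment`, `build_interleaved_question`, `is_correct`, and the file names are hypothetical names introduced here only to show how context frames, question text, and lettered image options might be ordered so that each option image stays tied to its letter.

```python
from dataclasses import dataclass
from string import ascii_uppercase


@dataclass
class Segment:
    """One piece of an interleaved prompt (hypothetical structure)."""
    kind: str     # "text" or "image"
    content: str  # the text itself, or an identifier/path for an image


def build_interleaved_question(question, context_frames, option_images):
    """Order video frames, question text, and lettered image options.

    Returns the segment list plus a letter -> image mapping, which is the
    co-reference the model has to resolve when it answers with a letter.
    """
    # Context frames come first, in temporal order.
    segments = [Segment("image", frame) for frame in context_frames]
    segments.append(Segment("text", question))

    letter_to_image = {}
    for letter, image in zip(ascii_uppercase, option_images):
        # Each option image is immediately preceded by its letter label.
        segments.append(Segment("text", f"({letter})"))
        segments.append(Segment("image", image))
        letter_to_image[letter] = image
    return segments, letter_to_image


def is_correct(predicted_letter, correct_image, letter_to_image):
    """Check a letter answer against the image it refers to."""
    key = predicted_letter.strip().upper()
    return letter_to_image.get(key) == correct_image


# Illustrative usage with made-up file names.
segments, mapping = build_interleaved_question(
    "Where will the bottle end up?",
    ["frame_1.png", "frame_2.png"],
    ["option_a.png", "option_b.png", "option_c.png"],
)
```

Keeping the letter-to-image mapping separate from the prompt makes it possible to score a reply by the image it refers to rather than by the raw letter, which is one way to shuffle option order between runs under these assumptions.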

We built a comprehensive and exhaustive set of cognitive experiments across three Piagetian developmental stages

We observe diverse performance across Multi-modal Large Language Models